This website contains information about my research, publications, and projects during my Ph.D. I am now a full-time software engineer and am no longer updating this website. The publications page is up to date as of 2/9/23 and includes my dissertation.

If you would like to contact me, please email me using the "Contact" link above (I am more than happy to talk about my research/Ph.D.).

I successfully defended my dissertation on 2/9/23 and am an incoming remote Ph.D. intern at iRobot, focusing on ROS2 development from February to July 2023. Once my internship is complete, I am looking for a full-time software development position, either based in Chicago or remote, and I am open to travel. I am interested in software architecture and user experience.

My background is in software development (C++, C#, Python) focused on robotics (ROS/ROS2/ROS#),

games (Unity), and augmented reality (MRTK, ARKit, ARCore). My core projects are open-source and

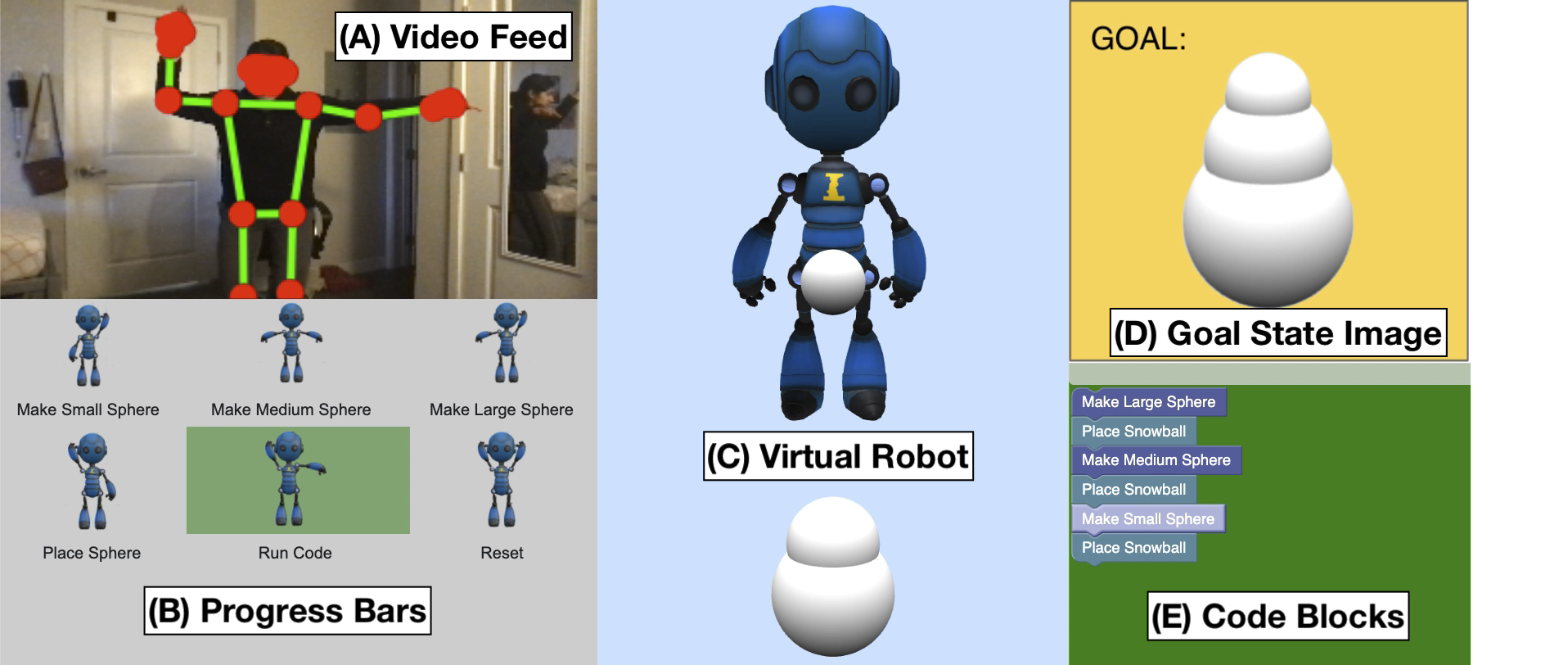

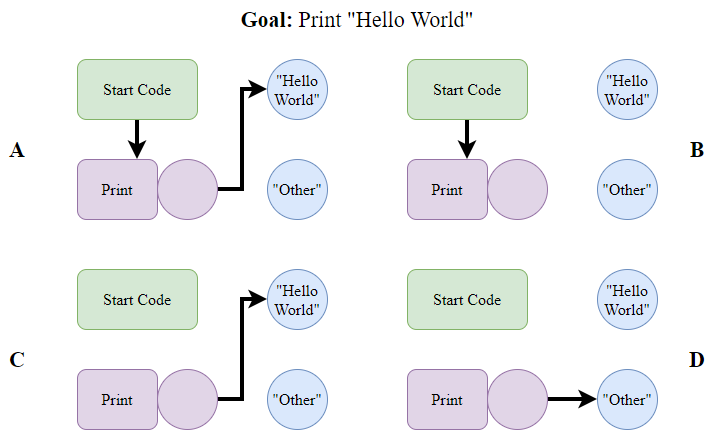

include a custom AR visual programming language - MoveToCode - with an autonomous robot tutor that

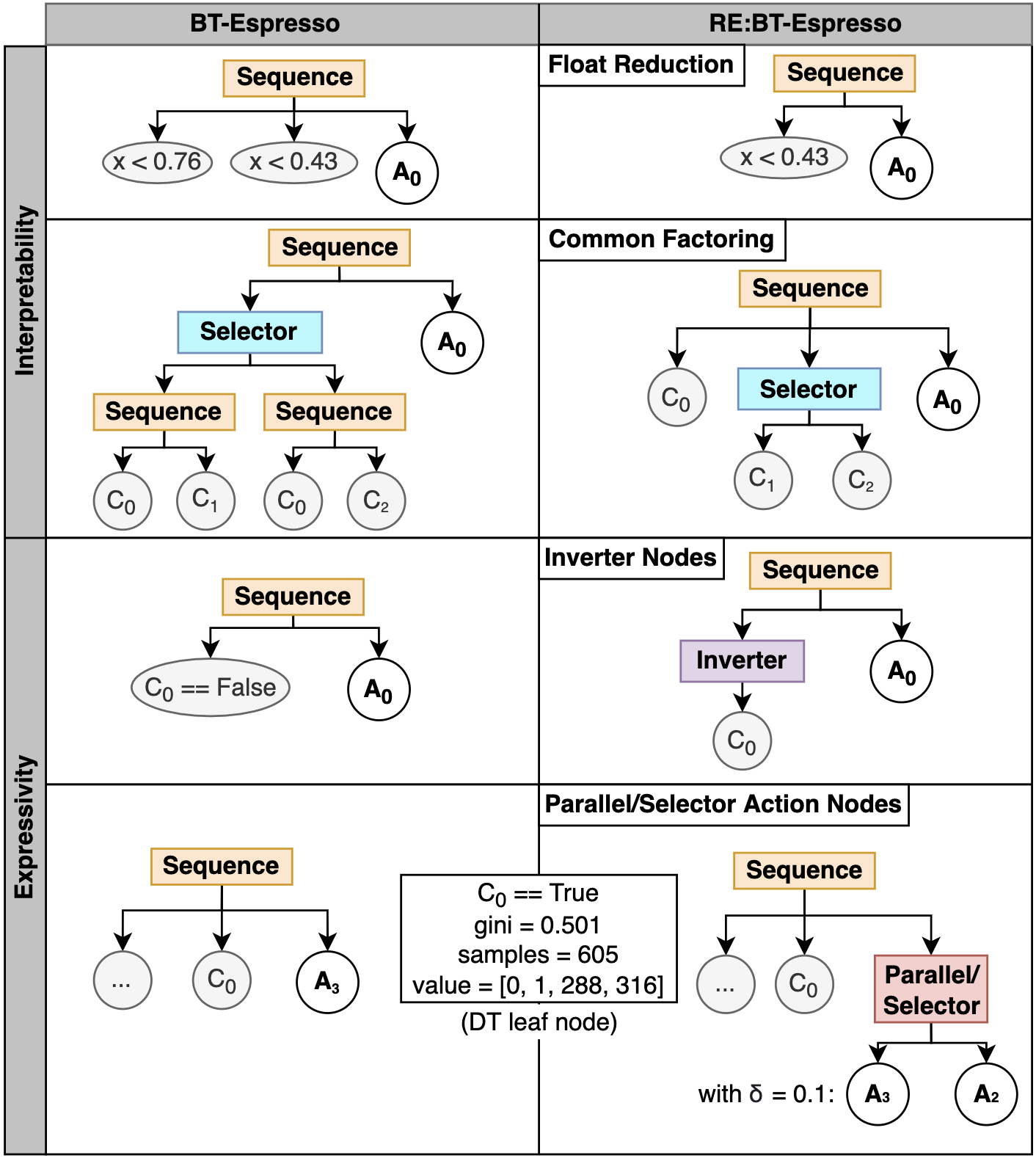

responds to student curiosity and a learning behavior trees from demonstration pipeline -

RE-BT-Espresso. (Github)

- Languages: C#, C++, Python, JavaScript, R, Bash
- Tools: Unity, Robot Operating System (ROS), RosSharp (ROS#), Mixed Reality Toolkit (MRTK), JupyterLab (pandas, seaborn, sklearn)

For research, I co-authored three funded grants with my Ph.D. advisor, Maja Matarić (~$1.55 million total funding), published 20 papers (2 journal, 9 conference, 9 workshop), and mentored 26 Master's, undergraduate, and high school students. These students first-authored 7 papers, won 7 undergraduate research awards, were nominated for 2 conference best paper awards, contributed code to our open-source projects, and were co-authors on the majority of my papers.

University of Southern California - Los Angeles

University of Michigan - Ann Arbor